Generate Explanations for Time-series classification by ChatGPT

Image credit: Unsplash

Abstract

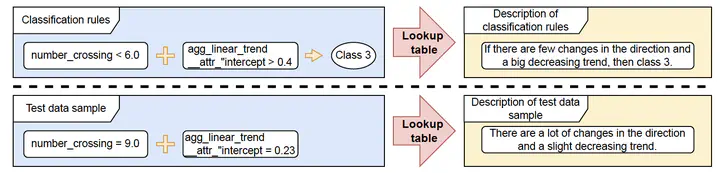

As machine learning has developed, explainability has become increasingly important, because it helps clients trust the results that AI systems produce. Traditionally, researchers have relied on feature importance to explain why a model produces a given outcome. This approach has limitations: even when documentation introduces sample cases and describes the formulas behind the features, the implicit meaning of those features remains hard to grasp. As a result, establishing a clear and understandable connection between features and the underlying data is difficult. In this paper, we introduce a novel method for explaining time-series classification that uses ChatGPT to make results more interpretable and to foster a deeper understanding of how features contribute within time-series data.
